The problems with translation

There's an inefficiency at the heart of modern product development that I've spent a long time thinking about. We've normalized a process where designers create visual designs in one set of tools, then developers recreate those same interfaces in code. This isn't collaboration; it's translation. While skilled teams can reduce the gaps, translation inherently introduces friction and often results in subtle but meaningful differences between vision and implementation.

I've spent years watching beautiful designs degrade through a series of well-intentioned handoffs. What starts as a fluid, cohesive experience in Figma often arrives in production with subtle but important differences. Animations feel less polished. Spacing becomes inconsistent. Typography loses its rhythm. Colors shift almost imperceptibly.

None of these compromises are catastrophic individually, but collectively they create a significant gap between design and reality. These aren't bugs in the traditional sense—they're translation errors.

The cost beyond pixels

This inefficiency goes deeper than visual fidelity. It creates a cycle that drains creative energy and builds organizational friction:

Designers create prototypes. Developers recreate them in code. QA compares implementation against design. Designers request adjustments. The cycle repeats—burning time, increasing frustration, and fragmenting what should be a unified creative process.

What's bizarre isn't that we do this at all (specialization has obvious benefits) but that we've accepted it as necessary rather than questioning whether the entire paradigm is artificial. What if the wall between design and development isn't inevitable but merely an artifact of our tools?

We recently had our first onsite of the year at Uniswap, and as with all onsites we had a hackathon. As expected, AI featured prominently in many of the projects folks worked on. My coworker Fred Zaw and I explored how we could create a circular workflow where design informs code, code enables experimentation, and those experiments feed directly back into design. Not a better handoff process, but the elimination of handoffs entirely.

The vibecoding catalyst

A few weeks ago, I came across Fred's vibecoding experiment and it immediately resonated with challenges I'd been thinking about for years. Fred was exploring whether designing directly in code could replace Figma—not as a theoretical exercise, but with actual production work.

"So while I don't think I'll be removing Figma from my process entirely for the reasons stated above, I see myself using these methods to complement one another, significantly cutting down on my time spent in Figma." (Fred Zaw)

His conclusion was nuanced—vibecoding wasn't a complete replacement for tools like Figma, but it dramatically reduced the gap between concept and implementation. The post was a wonderful articulation of something I've been noodling on for years: what if the divide between prototype and production isn't inevitable?

I DM'd Fred and asked if he wanted to jam on something for our upcoming hackathon. He was in, and we decided to take this concept further, seeing what we could build across our entire product suite: web app, browser extension, and React Native app.

What should we be asking?

The question isn't whether designers should code or developers should design. The real question is whether the distinction between design artifacts and implementation artifacts needs to be so stark.

The traditional product development cycle has treated prototype code as disposable: a visual approximation to be recreated "properly" later. This approach persists largely because of historical limitations rather than inherent necessity.

Every translation between mediums creates opportunities for design intent to be lost. A designer's precise taste and vision becomes an engineer's approximation, not because of skill gaps, but because traditional workflows force multiple interpretations of the same idea.

At Uniswap, we already have a well-structured cross-platform component system using Tamagui. This provides a solid foundation, but we wanted to explore how newer approaches might further reduce this gap, especially with what AI-assisted coding (aka vibecoding) now brings to the table.

From translation to cyclical feedback loop

Most attempts to improve design → development workflows focus on making handoffs less painful. Better documentation. Automated style guides. Pixel-perfect comparison tools. All of these approaches accept the fundamental premise: design happens in one place, development happens in another, and there will always be a potentially lossy translation between them.

What if we rejected that premise entirely?

What if design and implementation were just two views of the same underlying artifact? What if a change in one automatically and losslessly updated the other? What if we could create a circular flow where design informs code and code feeds back into design? This is the dream we've been chasing for years.

During our hackathon, we built a prototype that demonstrates the beginnings of this kind of circular workflow. And it's changing how we think about the entire design-development process.

A new shared language of components

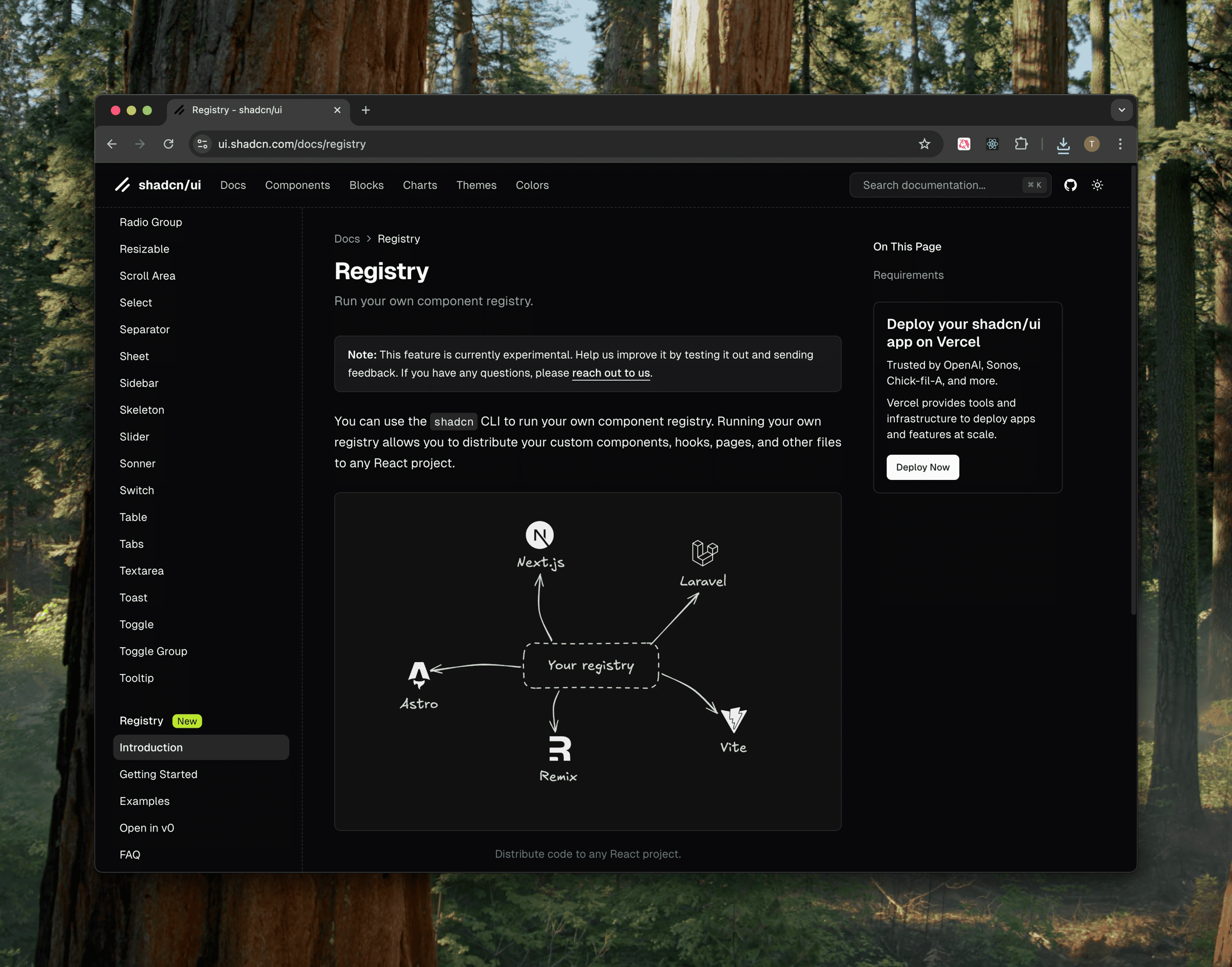

With the public release of shadcn/ui's registry mechanism, we felt like this was the perfect time to explore this new paradigm for vibecoding → production. As a quick recap, the shadcn registry model reimagines how UI components are shared and consumed—not as rigid packages requiring complex versioning, but as a flexible collection of source code that developers can directly install, customize, and own.

This approach eliminates the traditional overhead of package management while preserving the benefits of reusable components. Inspired by this, we began exploring how creating our own registry could improve our current design-development workflow, providing a standardized foundation that both humans and AI tools could understand and manipulate.
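To make the registry idea concrete, here is a minimal sketch of what a registry entry could look like when modeled in TypeScript. The types cover only a small subset of the shadcn registry item fields as we understand them, and the registry name, component name, file path, and URL are all hypothetical. It is an illustration of the shape, not our actual registry.

```typescript
// A local, simplified view of a shadcn-style registry. Only a few fields are
// modeled here; check the current registry schema before relying on them.
type RegistryItemType = "registry:component" | "registry:ui" | "registry:block";

interface RegistryFile {
  path: string; // where the source file lives in the registry repo
  type: RegistryItemType;
}

interface RegistryItem {
  name: string;
  type: RegistryItemType;
  title: string;
  description: string;
  dependencies: string[]; // npm packages the component needs
  files: RegistryFile[];
}

interface Registry {
  name: string;
  homepage: string;
  items: RegistryItem[];
}

// Hypothetical registry: every name, path, and URL below is illustrative.
const registry: Registry = {
  name: "example-design-system",
  homepage: "https://example.com",
  items: [
    {
      name: "token-card",
      type: "registry:component",
      title: "Token Card",
      description: "Card showing a token's name and price.",
      dependencies: ["tamagui"],
      files: [
        { path: "registry/token-card/token-card.tsx", type: "registry:component" },
      ],
    },
  ],
};

// Look up the source files a consumer would copy into their own codebase.
function filesFor(reg: Registry, itemName: string): string[] {
  const item = reg.items.filter((i) => i.name === itemName)[0];
  return item ? item.files.map((f) => f.path) : [];
}

// The JSON form is what tools (and AI assistants) would actually read.
const registryJson: string = JSON.stringify(registry, null, 2);
console.log(filesFor(registry, "token-card"));
```

The point of the sketch is that a registry entry is plain, readable data pointing at plain source files, which is what lets a consumer install, customize, and own the component rather than depend on a versioned package.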

The most compelling aspect of this approach wasn't how components are distributed. It was that the registry gives humans and AI tools the same vocabulary to work with.

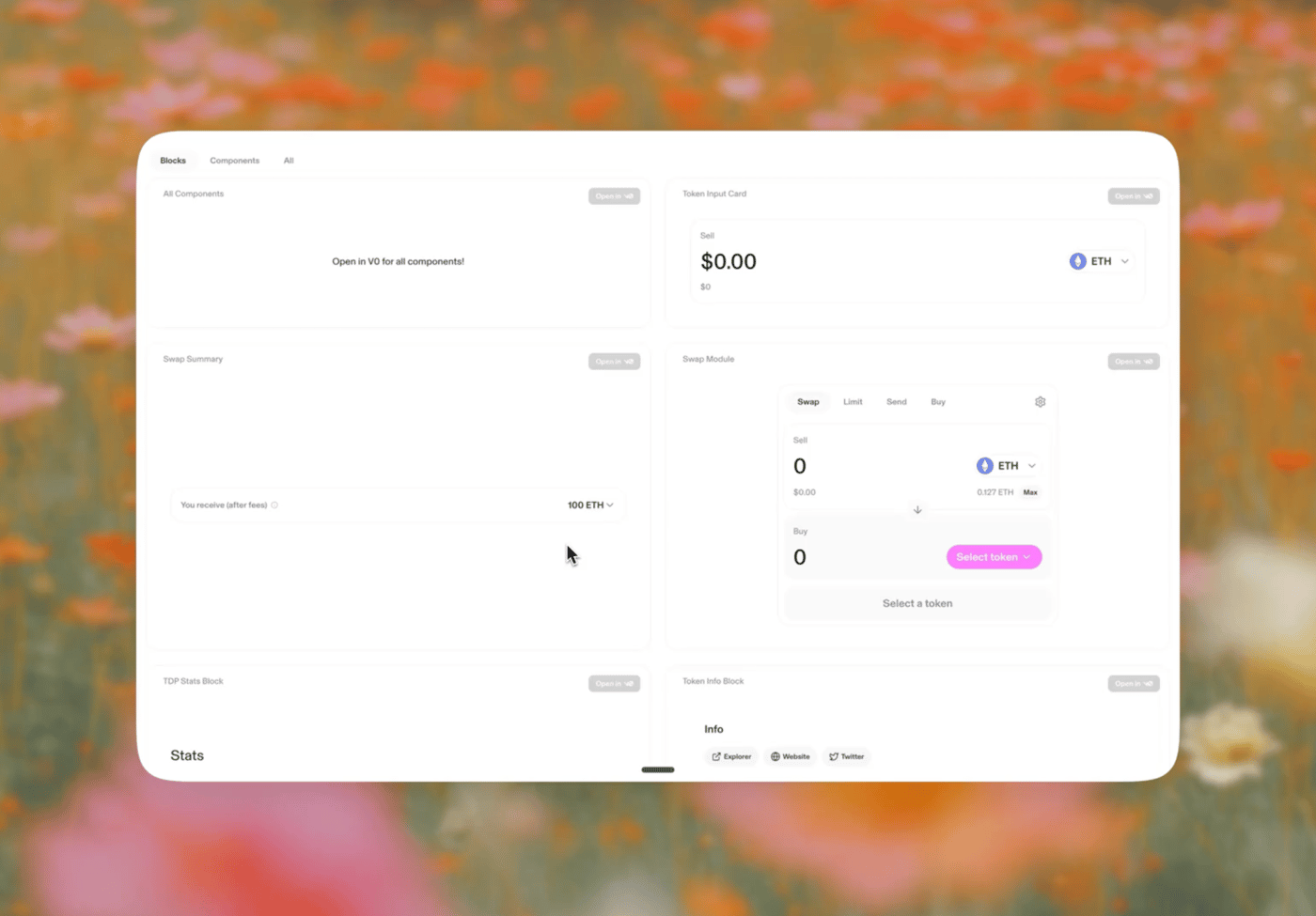

The real magic happens when this approach meets tools like v0 (v0.dev). The registry becomes a vocabulary that AI-powered design tools can draw from, remix, and implement directly.

Think of it like this: traditional component libraries are like books on a shelf—you can read them but not easily modify their content. The registry approach turns components into a language that tools like v0 can speak fluently, enabling them to compose, remix, and adapt components on the fly.

This is what makes the difference: v0 can pull components from the registry, understand their structure and purpose, and use them to quickly prototype new interfaces that maintain design consistency. When a designer wants to explore a new idea, v0 doesn't start from scratch—it speaks the same component language our production code uses.

For us at Uniswap, this wasn't about simply copying components into our codebase (though that's useful too). The revelation was creating this standardized language that enables new workflows between design exploration and implementation—a shared vocabulary that both AI tools and humans can understand.

Teaching models about design taste

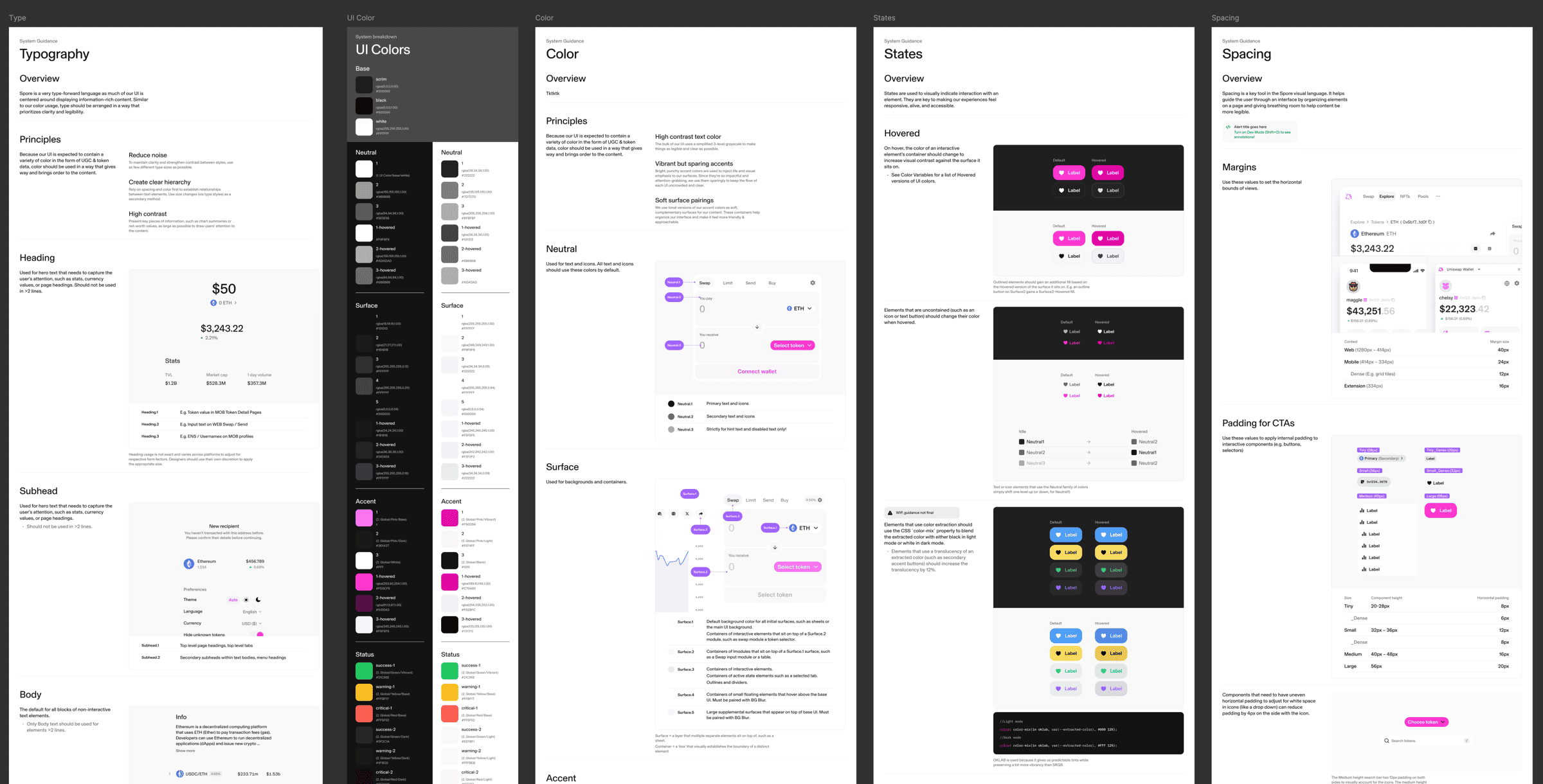

During this project we explored another aspect of this new set of tooling: teaching our AI tools about design intent.

Design systems typically focus on components and patterns, but great design depends equally on implicit knowledge—the unwritten rules, aesthetic sensibilities, and taste that experienced designers apply instinctively. This is often what gets lost in traditional handoffs.

We transformed Uniswap's Figma design guidelines—spacing conventions, color patterns, typography rules—into specific instructions for Cursor via project rules. When asked to create a component, it doesn't generate generic code; it builds something that genuinely feels like a Uniswap component.

The difference compared to generic prompts is significant. Components generated through these rules come out approximately 90-95% accurate on the first pass (aka one-shotting it), requiring only minimal adjustments for production readiness.
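As a rough illustration of turning design guidelines into project rules, the sketch below renders a small guidelines object into the text of a rule file. The guideline values, the `toProjectRule` helper, and the glob are hypothetical, and the frontmatter keys (`description`, `globs`, `alwaysApply`) follow Cursor's rule file format as we understand it. These are not the rules we actually used.

```typescript
interface TypeStyle {
  sizePx: number;
  lineHeightPx: number;
}

interface DesignGuidelines {
  spacingScalePx: number[];
  typography: { [role: string]: TypeStyle };
  colorRoles: { [token: string]: string };
}

// Hypothetical guideline values, standing in for a real design system.
const guidelines: DesignGuidelines = {
  spacingScalePx: [4, 8, 12, 16, 24, 32],
  typography: {
    body: { sizePx: 16, lineHeightPx: 24 },
    heading: { sizePx: 24, lineHeightPx: 32 },
  },
  colorRoles: {
    surface: "background of cards and sheets",
    accent: "primary actions only",
  },
};

// Render the guidelines as rule-file text: frontmatter, then plain instructions.
function toProjectRule(g: DesignGuidelines, globs: string): string {
  const lines: string[] = [
    "---",
    "description: Design conventions for generated UI components",
    "globs: " + globs,
    "alwaysApply: false",
    "---",
    "",
    "## Spacing",
    "- Use only these spacing values (px): " + g.spacingScalePx.join(", ") + ".",
    "",
    "## Typography",
  ];
  for (const role of Object.keys(g.typography)) {
    const t = g.typography[role];
    lines.push("- " + role + ": " + t.sizePx + "px / " + t.lineHeightPx + "px line height.");
  }
  lines.push("", "## Color");
  for (const token of Object.keys(g.colorRoles)) {
    lines.push("- " + token + ": " + g.colorRoles[token] + ".");
  }
  return lines.join("\n");
}

const ruleText = toProjectRule(guidelines, "src/components/**/*.tsx");
console.log(ruleText);
```

Generating the rule text from data rather than writing it by hand is one possible design choice: when the guidelines change, the instructions given to the AI tool change with them.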

Figma as a translator

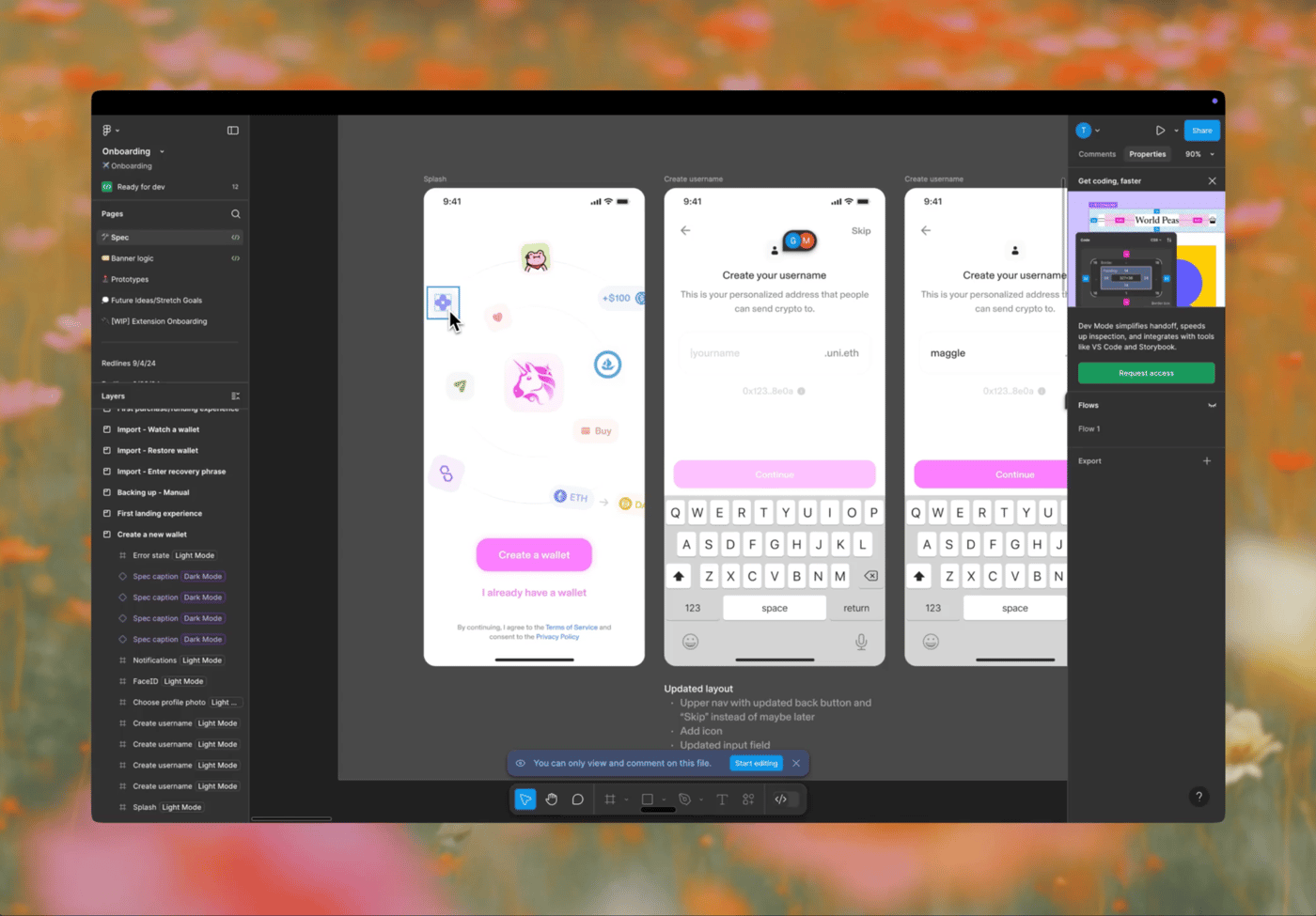

We also used a Figma Model Context Protocol (MCP) server almost as an agentic auto-translator of our designs to code. MCP acts like a standardized connector for AI applications, enabling different tools to provide structured context to AI models. This creates a direct pipeline between our design system and implementation tools.

With this MCP server, our Figma designs become more than visual references: they become structured data that AI tools can interpret with precision. The design intent captured in Figma flows directly to our implementation tools without the traditional handoff friction.

This creates a seamless chain from design exploration to implementation:

1. Designers work in Figma, capturing their intent
2. Figma MCP exposes this structured data to AI tools
3. AI tools like Cursor/v0 generate registry-compatible implementations
4. The resulting code preserves the original design intent
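To show why structured data matters in the chain above, here is a small sketch that resolves raw design values to design tokens and flags anything that doesn't fit the system. The `DesignNode` shape, the token tables, and the helper names are all hypothetical. This is not the Figma MCP server's actual output format, only a stand-in for the kind of structured data a tool could receive.

```typescript
// Hypothetical structured node, standing in for what a design tool could expose.
interface DesignNode {
  name: string;
  paddingPx: number;
  gapPx: number;
  fillHex: string;
}

// Hypothetical token tables; a real system would import these from its theme.
const spacingTokens: { [token: string]: number } = {
  "$space.xs": 4,
  "$space.sm": 8,
  "$space.md": 16,
  "$space.lg": 24,
};
const colorTokens: { [token: string]: string } = {
  "$color.surface": "#FFFFFF",
  "$color.accent": "#3366FF",
};

interface Resolution {
  props: { padding: string; gap: string; backgroundColor: string };
  warnings: string[];
}

// Find the spacing token closest to a raw pixel value.
function nearestSpacingToken(px: number): { token: string; exact: boolean } {
  let best = "";
  let bestDistance = Infinity;
  for (const token of Object.keys(spacingTokens)) {
    const distance = Math.abs(spacingTokens[token] - px);
    if (distance < bestDistance) {
      best = token;
      bestDistance = distance;
    }
  }
  return { token: best, exact: bestDistance === 0 };
}

// Find the color token matching a hex value, ignoring case.
function colorTokenFor(hex: string): string | undefined {
  const wanted = hex.toUpperCase();
  return Object.keys(colorTokens).filter((t) => colorTokens[t].toUpperCase() === wanted)[0];
}

// Map raw values to tokens and record every place the design left the system.
function resolveNode(node: DesignNode): Resolution {
  const warnings: string[] = [];
  const padding = nearestSpacingToken(node.paddingPx);
  const gap = nearestSpacingToken(node.gapPx);
  if (!padding.exact) {
    warnings.push("padding " + node.paddingPx + "px is off-scale, snapped to " + padding.token);
  }
  if (!gap.exact) {
    warnings.push("gap " + node.gapPx + "px is off-scale, snapped to " + gap.token);
  }
  const color = colorTokenFor(node.fillHex);
  if (!color) {
    warnings.push("fill " + node.fillHex + " has no matching color token");
  }
  return {
    props: { padding: padding.token, gap: gap.token, backgroundColor: color || node.fillHex },
    warnings,
  };
}

const result = resolveNode({ name: "TokenCard", paddingPx: 16, gapPx: 10, fillHex: "#ffffff" });
console.log(result.props, result.warnings);
```

With a screenshot, a 10px gap and an 8px gap look nearly identical. With structured values, the mismatch can be surfaced explicitly instead of being silently approximated.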

The ideal process

Our experiments have revealed a workflow that preserves design intent while eliminating most translation loss:

1. Designers explore initial concepts in Figma (leveraging its visual exploration strengths)
2. These concepts move to Figma MCP for structured context sharing
3. Cursor/v0 generates implementations using registry components
4. Designers make direct adjustments to the implementation
5. Developers focus on complex logic, data fetching, and performance optimization
6. The result is production-ready code, not a throwaway prototype

Democratizing production-quality code

The most profound impact of this approach—and what I'm most excited for—is how it changes who can contribute to the codebase. The traditional boundary between designer and developer becomes more permeable in the most productive way.

When prototype code can become production code, designers naturally begin thinking differently about implementation constraints. When components come pre-endowed with design intent, developers gain deeper appreciation for design decisions. The result is a more collaborative, efficient process that yields higher quality outcomes.

This doesn't mean designers must become expert coders or engineers must become expert designers. Rather, it means both groups can contribute more meaningfully to each other's domains without requiring full expertise in both.

Taste as the fundamental primitive

Over the years, one thing has become increasingly clear to me: taste is the fundamental primitive we're working with in product development—not wireframes, not code, not components. The tools we use—Figma canvases or code editors—are merely mechanisms for expressing this underlying aesthetic intelligence.

What matters isn't whether something was born in a design tool or crafted directly in code, but whether it embodies the deliberate decisions and sensitivity that distinguish the exceptional from the adequate. The medium is secondary to the message.

This perspective changes how I'm thinking about the pragmatic challenges we faced:

Components become vessels for taste: Our components need to just work across platforms while looking amazing by default. All the states, all the accessibility requirements, all the platform quirks—handled. When typography and spacing feel right without tweaking, we're not just saving time—we're making sure design decisions survive the journey from concept to product.

Designer-developer boundaries dissolve: Designers don't need to become engineers, but they do need enough code fluency to play in this new space. And our components have to respect platform differences—web should feel web-native, mobile should feel like proper mobile. When it works, designers express their taste directly through new tools, and developers become amplifiers of that taste rather than translators.

Quality is taste made concrete: How do we keep standards high as things evolve? How do we make sure components are fast without sacrificing beauty? These aren't separate technical concerns—they're about creating feedback loops that protect taste across the entire product lifecycle.

What excites me most about this approach is that it creates a direct pipeline for taste—from initial concept all the way to final implementation—without the degradation that happens when taste has to survive multiple translations between disciplines. When designers and developers share the same primitive (taste) expressed through compatible mediums (the registry), we might finally close the gap between what we envision and what we ship.

What's next: moving beyond the hackathon

There are so many fun and cool tools that have converged to make this approach possible:

- Shadcn registries providing flexible, ownable component distribution
- Cross-platform styling that works across web and native
- AI tools that understand and generate code within design contexts
- Design tools with MCP integration for structured data sharing

The hackathon was just a starting point. Now we're running small experiments to see if this works in the real world. We're building more registry components, tweaking our AI instructions based on what the team is learning, and figuring out what it actually looks like to prototype directly in code as part of our design process.

What I've been happiest to see is the enthusiasm from our design team. They're eager to prototype new ideas immediately and get them in front of stakeholders. When a designer can communicate their full vision—including interactions, animations, and edge cases—without translation loss, design reviews become more productive. Everyone can focus on the actual experience rather than implementation details. Designers aren't asking "can we build this?" but "should we build this?"

The future isn't about better handoffs—it's about no handoffs at all. It's about tools that work with our creativity rather than forcing us to constantly translate between disciplines. When we get this right, prototypes and production aren't separate things with a gap to bridge. They're just different expressions of the same underlying vision. That's a future I want to help build.